Designing live human-AI partnerships in musical improvisation (GenAICHI 2024)

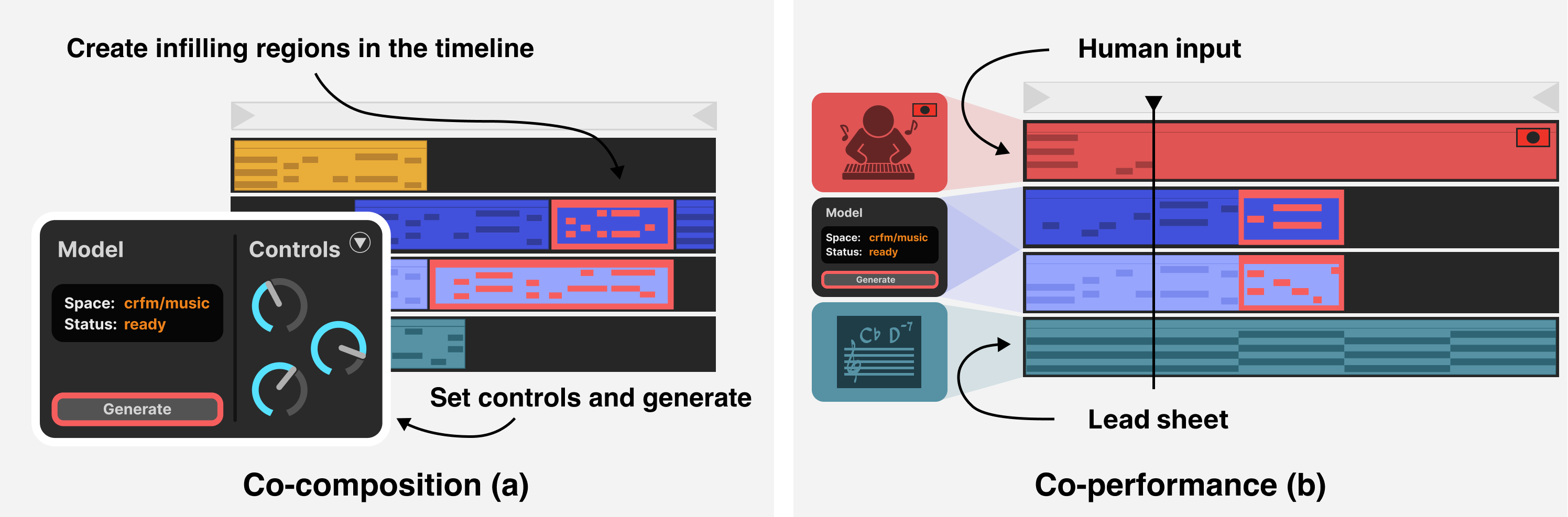

Recent progress in generative models for music has highlighted the need for interactive, controllable systems that serve the goals of musicians. In this paper, we introduce a system that integrates a compositional assistant and live improvisational partner directly into the modern music producer's toolkit: the digital audio workstation. Our system design is guided by three goals: 1) integrating with modern music production software, 2) supporting non-linear songwriting workflows, and 3) enabling generative AI to support live improvisation tasks. We find that anticipatory transformer models are well suited to these goals, and we present a method for adapting an anticipatory model for live improvisation. We call on future work to further explore human-AI co-performance by designing systems that are accessible and integrated into the workflows of domain experts.